So, the first thing we need to do in any discussion of AI is to talk about the very term "AI", artificial intelligence, because that term is itself half the problem. The term "AI" can trick people into thinking that these algorithms are doing something very different from what they are actually doing, and therefore that as they "get better", they will become much more powerful than they are now.

The algorithms currently referred to as "AI" are not intelligent. By this, I do not mean that they are stupid; they are not even stupid. They are just algorithms. They have, quite literally, no idea what they are doing.

The reader might be forgiven for thinking that I am talking about some version of "the zombie problem", in which we wrestle with abstruse philosophical issues about how anyone can truly know that somebody else is sentient, and not just a mindless zombie that is imitating human behavior without sentience. If we ever manage to create AI, that will be a tough problem, and one with many ethical and even existential consequences. But that's not something we have to deal with now, because the current crop of "AI" is not even stupid.

To illustrate, let us consider the now famous "game of chess" between ChatGPT and Stockfish (comedically narrated by GothamChess). Now, Stockfish does include a neural network element, which in recent versions entirely replaces the hand-written board evaluation element (the other two principal elements, board representation and heuristic tree search, still use non-neural-network architectures). But, crucially, Stockfish does (all "zombie problem" concerns aside) know that it is playing chess.

That is, Stockfish has a representation of the (chess) world in its mental state, and that representation includes things like the rules of chess. Stockfish does not "hallucinate" in the way that ChatGPT does (about chess and everything else), because Stockfish is purpose-built for chess playing. It knows, in the literal sense, what it is doing. It can evaluate whether or not a potential chess move is legal, or desirable. It has a good deal of code devoted to that.

ChatGPT, on the other hand, does not. Large language models such as ChatGPT do have purpose-built architecture for handling vocabulary. "Tokenization" is the term for converting text into tokens, which do not map onto words on a 1:1 basis, and the network then uses the surrounding tokens to determine that "May" in "May the Force Be With You" and "May" in "May 1st is May Day" are not being used in the same sense (whether it can handle "May The Fourth Be With You" on Star Wars Day, May 4th, is an interesting question, but let's not get distracted).

Large Language Models (LLMs) can, in some sense, be said to be aware that they are using language. So, when Stockfish and ChatGPT talk to each other in chess notation, Stockfish knows that it is playing chess (but perhaps not that it is communicating about it), whereas all that ChatGPT "knows" is that it is talking to someone. It not only doesn't know what it is talking about, it doesn't know that it is supposed to.

All that LLMs can do is language, because that is all they were programmed to do. Waving a neural-network magic wand over them doesn't change that fact, no matter how large the neural network becomes.

In real life, if we are talking to somebody, and they know a lot of vocabulary related to a field, we expect that they got that large vocabulary by reading (or talking) about that topic a lot. This also means they probably spent a lot of time thinking about that topic, so there is a good chance that they know something about it (although we have probably all run into the exception, the person who throws out a lot of vocabulary related to a topic without actually knowing much about it). In other words, they have probably spent a lot of time building up a mental representation of the topic in question, which they can use to make sure that what they are saying is true. They can lie, if they want to, but at least they will know that they are lying, because they know what they are talking about.

LLMs, though, do not attempt to construct a representation of the world in their neural network. All they do is what they were programmed to do, and that is just to say stuff that sounds like what other people say. They are really good at language, in the way that Stockfish is good at chess, and for the same reason: humans programmed them to be good at language. They don't even fail to construct a representation of the world; they never attempt to, and that is because we didn't program them to.

Humans did not program it to be intelligent, because we don't know how to do that. We also almost certainly won't, in the lifetime of anyone alive as I am writing this.

Why, then, do we see so much hype around "AI"? Well, part of it is that it is good at language, and that fools us because the humans we meet who are good at language are often (though not always) intelligent. But there is a second reason, and that's what I want to talk about here.

When companies with a lot of money invest it in a field, we naturally tend to assume that they see the prospect of making a lot of money there. Sometimes it doesn't work out (see self-driving cars), but at least their motive is clear. Google, Microsoft, and Amazon all seem to be investing a lot in "AI", so we mostly assume they think it will pay off. But I don't think that is really what's happening here.

Quite obviously, I cannot predict with any certainty what will happen with the stock prices of these (or any other) companies, and you shouldn't take this as any kind of financial advice to bet your savings on. But I believe what is going on here is a shell game.

The dirty little secret of "cloud computing" is that a lot of it (from 2008 until recently) was wasted. Software startups focused on growing as fast as possible, in order to gain a huge user base that could make them an acquisition target. The phrase "throw hardware at the problem" became, well, a phrase: a typical and widely applied approach to any problem. When presented with a choice between spending time to figure out how to use their computing resources more efficiently, or just doubling their AWS (or Azure or Google Cloud) bill, they chose the option that was quicker rather than the one that was cheaper. This was because they cared about time more than money, in large part because interest rates were very low.

Once the era of easy money was over, it became time to figure out how to become profitable. Also, if you've already grown to about as large a user base as you can reasonably expect to get in the near future, it is time to trim your expenses. This has resulted in a lot of companies working, in the last couple of years, on ways to reduce their cloud compute bill. Moreover, there is no longer a steady stream of new software startups, each ramping up its cloud compute bill as it spends its way through easy VC money. With existing big customers now working hard to reduce their expenses, and no supply of new customers to take their place, cloud computing became more like a commodity, with a shrinking market.

But wait, you ask, aren't the big cloud providers still talking about robust business? Yes, they are, and all of them credit "AI" customers for it.

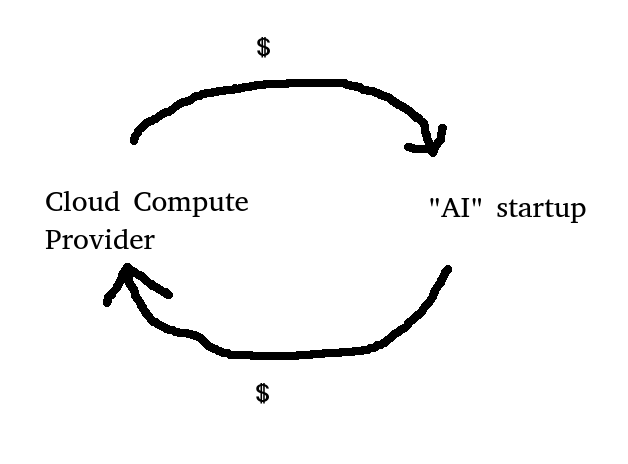

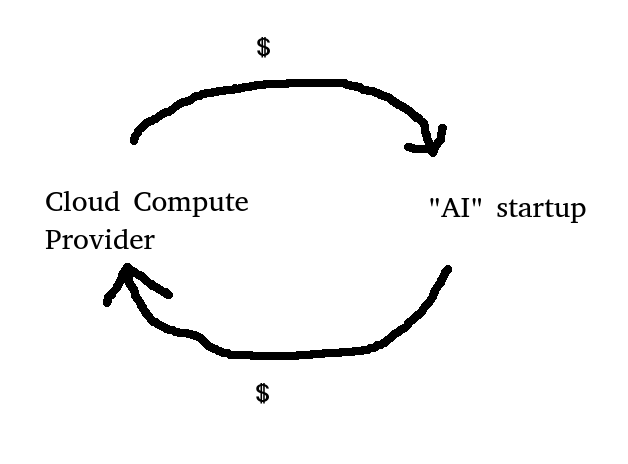

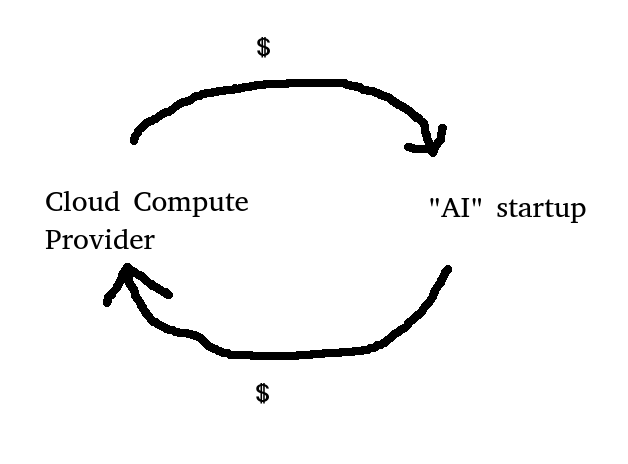

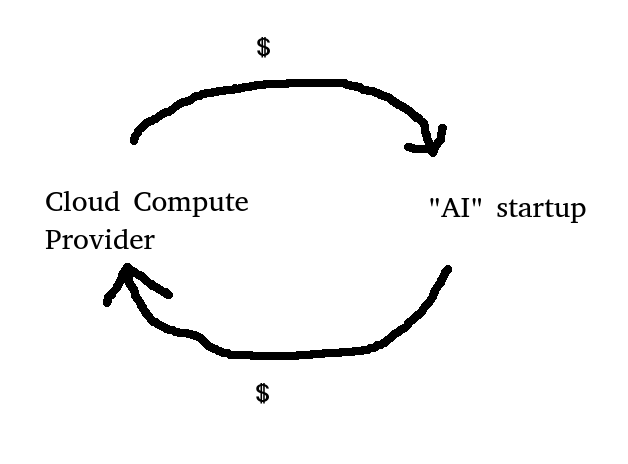

Nothing wrong with that, you might think. Money is money. Well, it is, unless you had to give the "customers" the money that they then gave back to you. Then it's more like printing "100% off" coupons, handing them to customers so they can hand them back to you, and then congratulating yourself on how much business you've got.

Not long ago, VCs began to complain that the hot new AI startups were hard to get a piece of, because the Big Tech companies were all overpaying for them. The poster child for this is Microsoft's investment in OpenAI, the company behind ChatGPT. However, we are told that the great majority of the "investment" is not dollars, but rather credits for Microsoft's Azure cloud platform.
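To make the coupon arithmetic concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the `CloudProvider` class and its methods are hypothetical, the figures (1 in cash, 9 in credits, in whatever unit you like) are made up, and it takes for granted the premise of this essay that redeemed credits get counted as cloud revenue. Real accounting treatment may well differ.

```python
from dataclasses import dataclass


@dataclass
class CloudProvider:
    """Toy model of a cloud provider that 'invests' partly in its own credits."""
    cash: float = 0.0                    # net cash position, relative to the start
    reported_cloud_revenue: float = 0.0  # headline figure (assumed to include credits)
    credits_outstanding: float = 0.0     # credits handed out but not yet redeemed

    def invest(self, cash: float, credits: float) -> None:
        """Give a startup some real cash plus a pile of cloud credits."""
        self.cash -= cash
        self.credits_outstanding += credits

    def bill(self, amount: float) -> float:
        """Bill the startup; credits are used up first. Returns cash received."""
        paid_in_credits = min(amount, self.credits_outstanding)
        self.credits_outstanding -= paid_in_credits
        cash_received = amount - paid_in_credits
        self.cash += cash_received
        # Assumption: the whole bill counts as revenue, however it was paid.
        self.reported_cloud_revenue += amount
        return cash_received


provider = CloudProvider()
provider.invest(cash=1.0, credits=9.0)   # hypothetical "10 unit investment"
cash_received = provider.bill(9.0)       # the startup spends all of its credits

print(f"reported cloud revenue: {provider.reported_cloud_revenue}")
print(f"cash received from the customer: {cash_received}")
print(f"net cash position: {provider.cash}")
```

In this made-up example the headline revenue figure goes up by 9 while the cash that actually came in from the customer is zero, which is the "100% off" coupon in ledger form.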

So, what is the difference between these two scenarios:

The difference is in what it sounds like to shareholders. Scenario 1 sounds like you are following the typical path for large tech companies, turning from a fast-growing startup with new products into a slow-growing company with commodity-like profit margins. Scenario 2 sounds much better.

Of course, Scenario 2 turns out very differently if the AI companies you've "invested" in all end up being profitable. So, how will we know if LLM-based "AI" companies like OpenAI will turn out to be profitable? Fortunately, there is an experiment taking place as we speak that will give us empirical evidence.

Microsoft has been an early(ish) investor in OpenAI, and has a great deal of influence over it. When the board of OpenAI tried to replace its CEO, Sam Altman, Microsoft was, in effect, able to veto this. ChatGPT actually runs on Microsoft servers. We can safely assume that Microsoft has access to the latest and greatest of what OpenAI has to offer. If ChatGPT (or any related product) can actually have real-world, productive impact, then Microsoft should be the first beneficiary of this. If LLMs are not just BS-as-a-Service, but rather offer real-world productive impact, then we should very soon see a burst of unusual, even unprecedented productivity from Microsoft.

If we soon see Bing take market share from Google search, or Microsoft manage to get back into the smartphone OS game, or some other new product come out of Microsoft that sells well and impresses us in a way that Microsoft has not done in years, then that will be evidence that LLMs such as ChatGPT are actually worth something. That would imply that the companies that make them will, even after the hype dies down, be worth something.

But if, a year from now, Microsoft still doesn't seem to be any more able to produce impressive software than it was before it invested in OpenAI, we won't have to debate whether or not LLMs are "for real", because we'll have real-world evidence. Given that Microsoft made a $10 billion investment in OpenAI in Jan 2023, arguably we should have seen something already, but these things take time. Still, the clock is ticking; by Jan 2025 we should see something if there is anything to see. I am not holding my breath.